Leveraging Deep Learning for Enhanced Image Processing

In this post, we look at how deep learning helps tackle the ill-posed problems that arise in medical imaging, in both healthcare and industrial contexts.

From the 1960s to 2000, CT and MRI technologies experienced significant advancements in spatial resolution. After 2000, the research emphasis transitioned to developing low-dose CT and fast MRI techniques, presenting challenges that are ill-posed for traditional mathematical methods.

Understanding the Mathematical Shift in MRI and CT

Before 2000, researchers mainly concentrated on well-posed problems that satisfied basic sampling rules such as the Nyquist criterion. The rule of thumb is that the number of measurements (the length of $b$) should roughly match the number of unknowns (the length of $x$), so that the forward model $A$ can be inverted.

Since 2000, researchers have increasingly turned their attention to highly ill-posed problems, using methods such as sparse sensing. Here the number of measurements (the length of $b$) falls far short of the number of unknowns (the length of $x$), and attempting to invert the forward model $A$ tends to produce significant artifacts.
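
The contrast between the two regimes can be seen in a small numerical sketch. It assumes a random Gaussian matrix as a stand-in for the forward model $A$ (not an actual CT or MRI operator) and uses the pseudoinverse as the "traditional" inversion. The sizes `n` and `n // 8` are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 64                                   # number of unknowns (length of x)
x_true = rng.standard_normal(n)

def relative_error(m):
    """Simulate m measurements b = A x and invert with the pseudoinverse."""
    A = rng.standard_normal((m, n))      # hypothetical forward model
    b = A @ x_true                       # noiseless measurements
    x_hat = np.linalg.pinv(A) @ b        # least-squares / minimum-norm solution
    return np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)

err_full = relative_error(n)             # as many equations as unknowns
err_sparse = relative_error(n // 8)      # far fewer equations than unknowns

print(f"relative error with m = n:   {err_full:.2e}")
print(f"relative error with m = n/8: {err_sparse:.2e}")
```

With as many equations as unknowns the signal is recovered almost exactly, while with one eighth of the measurements the minimum-norm solution is far from the true signal. Without extra information, the data alone cannot pin down $x$.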

As an example of low-dose CT, dental CBCT aims to provide high-resolution images with the least radiation possible, along with reduced equipment and maintenance expenses.

The clinical use of MRI is limited by its long data acquisition time. For pregnant women, faster scans are especially important, because fetal movement during a prolonged scan can lead to inaccurate images. Fast MRI aims to address this by reconstructing high-quality images from a smaller set of data points.

In essence, the goal is to lower the ratio of equations to unknowns (i.e., making $\frac{\text{\# of equations}}{\text{\# of unknowns}}$ as small as possible), aiming to decrease radiation exposure in CT scans and shorten data acquisition times in MRI procedures.

To tackle these ill-posed problems in CT and MRI, strong image priors are essential. The following figure illustrates the role of image priors: when reading fetal ultrasound images, doctors use their anatomical knowledge to see beyond what is visible in the image.

Deep Learning-based Solutions for Ill-Posed Problems

To transform the ill-posed problem into a well-posed one, we must extract a prior on $x$, Prior($x$), from the training data. Traditional regularization approaches are limited in how well they can bring prior knowledge that captures both local and global interconnections among grid elements into the solution process.
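
To make the idea of a data-driven prior concrete, here is a minimal sketch. It is not a deep network: as a deliberately simple linear stand-in, it assumes the signals of interest lie in a low-dimensional subspace and "learns" that subspace from a synthetic training set with PCA. The forward model `A`, the training set, and the sizes `n`, `k`, and `m` are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n, k, m = 64, 4, 12          # unknowns, intrinsic dimension, measurements (m << n)

# Hypothetical training set: 500 signals that all lie in a k-dimensional subspace.
basis = np.linalg.qr(rng.standard_normal((n, k)))[0]
train = rng.standard_normal((500, k)) @ basis.T

# "Learn" the prior: mean plus the k leading principal directions of the data.
mean = train.mean(axis=0)
_, _, vt = np.linalg.svd(train - mean, full_matrices=False)
U = vt[:k].T                 # n x k learned basis

# A new, unseen signal of the same kind, measured with only m equations.
x_true = basis @ rng.standard_normal(k)
A = rng.standard_normal((m, n))          # hypothetical forward model
b = A @ x_true

# Without a prior: minimum-norm solution of the underdetermined system.
x_plain = np.linalg.pinv(A) @ b

# With the learned prior x = mean + U z: m equations but only k unknowns.
z, *_ = np.linalg.lstsq(A @ U, b - A @ mean, rcond=None)
x_prior = mean + U @ z

def rel_err(x):
    return np.linalg.norm(x - x_true) / np.linalg.norm(x_true)

err_plain, err_prior = rel_err(x_plain), rel_err(x_prior)
print(f"relative error without prior: {err_plain:.2e}")
print(f"relative error with prior:    {err_prior:.2e}")
```

Restricting the solution to the learned subspace turns 12 equations in 64 unknowns into 12 equations in 4 unknowns, which is well-posed. A deep network plays an analogous role with a far richer, nonlinear description of plausible images.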

In the 1950s, solving the ill-posed problem of local CT seemed impossible, primarily because of Radon's mathematical theory of CT.

In local CT, the ill-posed problem can be converted into a well-posed one by applying the following prior knowledge: the discrepancy between the image reconstructed from fully sampled data and the image obtained from truncated data through filtered back projection is analytic in a given direction. Here, an image is called analytic in a given direction when its values within any local region determine, by extrapolation, the complete image along that direction.

When traditional methods are applied to a flawed Radon model, a local mismatch between the model and the data can result in substantial global artifacts in the reconstruction. To simplify the explanation, let's examine a toy Radon model using bi-chromatic X-rays at 64 and 80 keV.

The figure illustrates how a local discrepancy can cause a global alteration, $A^*b$, when the data are mapped into the range space, producing widespread artifacts. The figure below demonstrates that metallic implants introduce a notable discrepancy between the theoretical model and actual conditions, which causes severe artifacts.

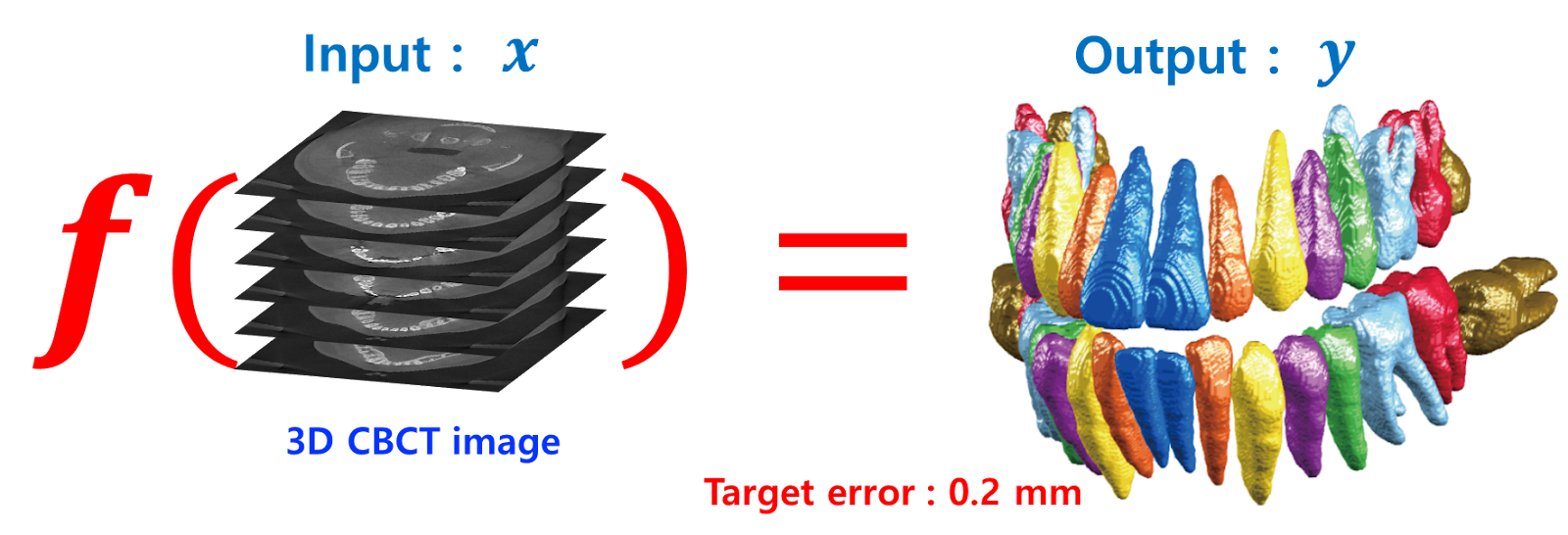

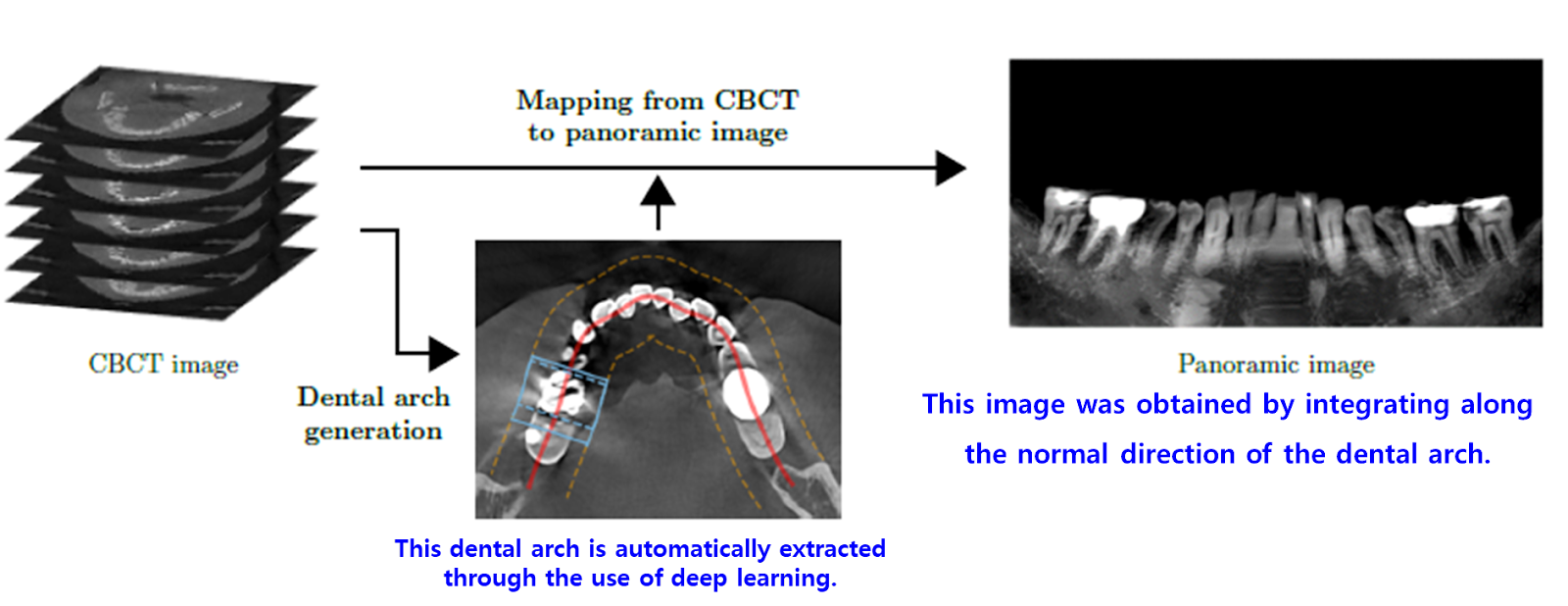

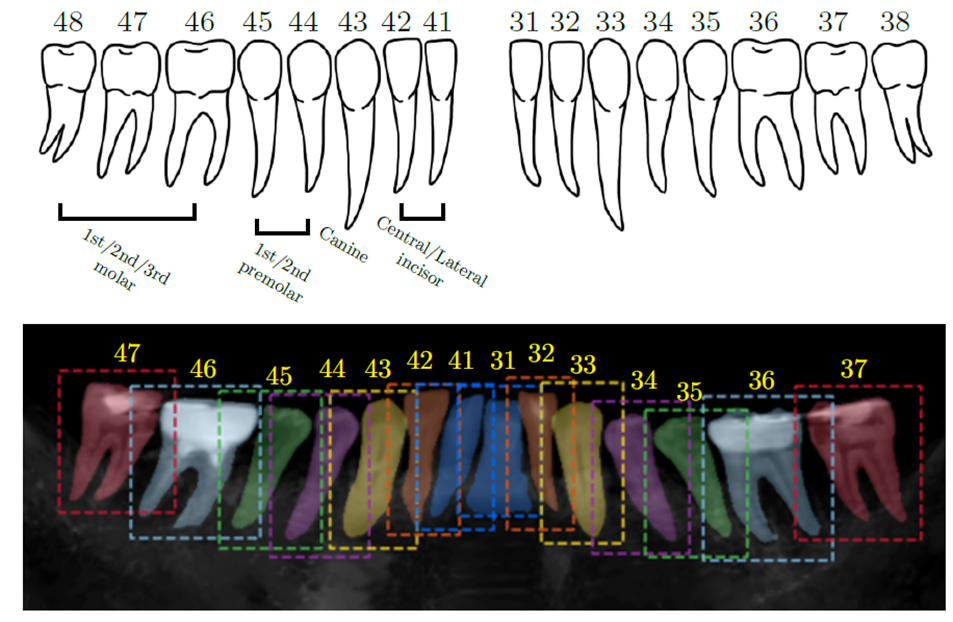
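
The way a local mismatch spreads through $A^*$ can also be sketched numerically. The example below is not the bi-chromatic toy model discussed above. It assumes a much cruder forward model with only horizontal and vertical ray sums, and it models the mismatch as an additive error on the rays passing through a single pixel. The grid size `N` and the pixel `(p, q)` are arbitrary.

```python
import numpy as np

N = 16                                   # image is N x N pixels
pix = np.arange(N * N).reshape(N, N)     # flat index of each (row, col) pixel

# Toy forward model A: one horizontal and one vertical ray sum per line.
A = np.zeros((2 * N, N * N))
for i in range(N):
    A[i, pix[i, :]] = 1.0                # ray i sums image row i
    A[N + i, pix[:, i]] = 1.0            # ray N + i sums image column i

# Local mismatch: the discrepancy is confined to the single pixel (p, q).
p, q = 5, 9
mismatch = np.zeros(N * N)
mismatch[pix[p, q]] = 1.0
delta_b = A @ mismatch                   # only the two rays through (p, q) are wrong

# Map the data error back to image space with the adjoint A^T (back projection).
artifact = (A.T @ delta_b).reshape(N, N)
affected = np.count_nonzero(artifact)

print(f"pixels with mismatch: 1, pixels touched by the adjoint: {affected}")
```

Although the error originates in one pixel, back projection smears it along every ray that crosses that pixel, so the artifact runs across the full width and height of the image. With many projection angles, as in real CT, these streaks fan out in all directions.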

Recently, Tae Jun Jang et al., in their work "A fully automated method for 3D individual tooth identification and segmentation in dental CBCT," published in IEEE Transactions on Pattern Analysis and Machine Intelligence in 2021, achieved 3D tooth segmentation by effectively leveraging prior knowledge within deep learning frameworks.

The key idea involves generating panoramic images of both the upper and lower jaws from CBCT scans to serve as 3D tooth segmentation priors. This method benefits from the insight that panoramic images are relatively unaffected by metal-related artifacts.
