Leveraging Deep Learning for Enhanced Image Processing

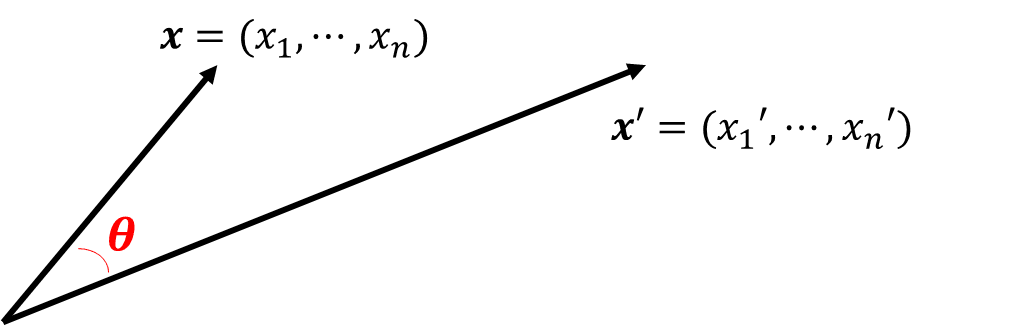

In this blog, we'll delve into how deep learning helps tackle the ill-posed problems encountered in medical and industrial imaging. From the 1960s to 2000, CT and MRI technologies made significant advances in spatial resolution. After 2000, the research emphasis shifted to developing low-dose CT and fast MRI techniques. These techniques give rise to reconstruction problems that are ill-posed for traditional mathematical methods.

Understanding the Mathematical Shift in MRI and CT

Before 2000, researchers mainly concentrated on solving well-posed problems that satisfied basic sampling requirements, such as the Nyquist criterion. The basic rule is that the number of measurements (the entries of $b$) should roughly match the number of unknowns (the entries of $x$). This ensures that the forward model $A$ in $Ax = b$ can be inverted. After 2000, researchers increasingly turned their attention to very ill-posed problems, tackling them with sparsity-based methods such as compressed sensing. In this case where the numb...
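The following minimal NumPy sketch of the linear model $b = Ax$ makes the measurement-count argument concrete. It uses a random Gaussian matrix as a stand-in for the forward model $A$. This is an assumption for illustration only, because a real CT or MRI operator is a Radon- or Fourier-type transform rather than a random matrix. All sizes and variable names (`x_true`, `A_full`, `A_under`, and so on) are hypothetical.

With $n$ measurements of $n$ unknowns, $A$ is square and (generically) invertible, so $x = A^{-1}b$ is unique. With only $m < n$ measurements, $A$ has a null space of dimension at least $n - m$. In that case, $x + z$ reproduces the same data for any $z$ with $Az = 0$.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 64  # number of unknowns, e.g. pixels in a tiny hypothetical image x

# A hypothetical sparse "image" with only three nonzero pixels.
x_true = np.zeros(n)
x_true[[5, 20, 41]] = [1.0, -2.0, 0.5]

# Assumption: a random Gaussian matrix stands in for the real CT/MRI forward model.
# Well-posed case: m = n measurements and a (generically) full-rank A.
A_full = rng.standard_normal((n, n))
b_full = A_full @ x_true
x_full = np.linalg.solve(A_full, b_full)  # unique solution x = A^{-1} b

# Ill-posed case: keep only m < n measurements, loosely mimicking
# fewer CT projections or undersampled MRI k-space.
m = 24
A_under = A_full[:m]
b_under = A_under @ x_true

# The minimum-norm least-squares solution fits the measurements exactly...
x_ls = np.linalg.lstsq(A_under, b_under, rcond=None)[0]

# ...but so does x_true plus any vector from the null space of A_under.
# With full_matrices=True (the default), rows m..n-1 of Vt span null(A_under).
_, _, Vt = np.linalg.svd(A_under)
z = Vt[-1]
x_alt = x_true + z

print("well-posed recovery exact:", np.allclose(x_full, x_true))
print("least-squares fits data:", np.allclose(A_under @ x_ls, b_under))
print("least-squares equals x_true:", np.allclose(x_ls, x_true))
print("x_true + z fits data:", np.allclose(A_under @ x_alt, b_under))
```

In the well-posed system, the solver returns `x_true`. In the undersampled system, both the least-squares estimate and `x_true + z` match the measurements, yet neither singles out `x_true`. Additional prior knowledge, such as sparsity, is what has to resolve this ambiguity.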